Summary - Every Kubernetes service can be reached through several different endpoints. Four scenarios are outlined that explain which endpoint to use, depending on whether the client is deployed on the Kubernetes cluster or externally, and on whether an API manager is used.

Introduction

We use OpenShift as our Kubernetes implementation.

Before we talk about calling a service, let’s first talk about deploying one. Let’s say we have created a RESTful service and we’d like to deploy it to Kubernetes. The easiest way to do this is to use the fabric8 maven plugin in your pom. Along with the [docker maven plugin](https://github.com/rhuss/docker-maven-plugin), it allows you to create a Docker container running your service by setting just a few configuration properties. For some ready-to-go examples see the [Fabric8 iPaas quickstarts](https://github.com/fabric8io/ipaas-quickstarts).

We’re not going to talk about the specifics, but at a high level your service is wrapped inside a Docker image. This image is uploaded under the fabric8 user on dockerhub. Note that the docker user is configured in the pom, and if you’d prefer to use a private docker registry you can set export DOCKER_REGISTRY=172.30.17.90:5000/TCP. The fabric8 maven plugin also creates the configuration artifacts needed by Kubernetes: 'template', 'service' and 'replication-controller' configuration files formatted in JSON. The template references the Docker image, and when the template is deployed to Kubernetes, Kubernetes can start a new instance of the service by instructing Docker to start a new container based on that image. In most quickstarts you can do all of this at once using mvn -Pf8-local-deploy.

So let’s assume you managed all of this, you can see your shiny new service running in the Fabric8 console, and now you want clients to call it. Depending on whether the client is part of the Kubernetes domain and whether you want to use the Apiman gateway, there are a few different scenarios.

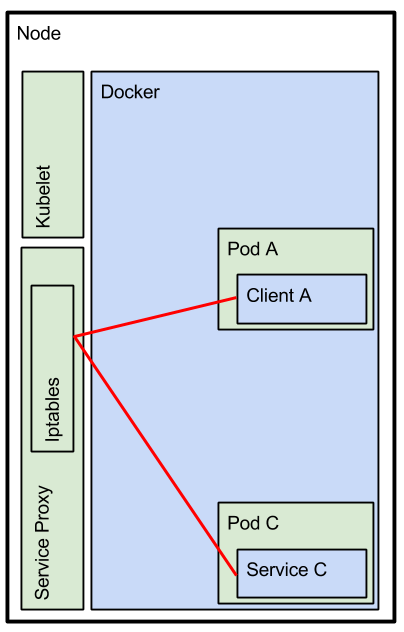

Scenario 1 - K8s Client to K8s Service

In scenario 1, Client A is a service on Kubernetes and you want to invoke another service in the same Kubernetes domain. This scenario is depicted in figure 1.

The client service can use the system parameters set in the Docker container to find the host and port settings of Service C in the Kubernetes domain. When invoking this service endpoint, the service proxy connects to one of the pods running behind the service. By default the service proxy tries to find local pods first (local meaning on the same node). The fragment below shows how to obtain the host and port settings of Service C:

String serviceName = "C";

String host = KubernetesServices.serviceToHostOrBlank(serviceName);

String port = KubernetesServices.serviceToPortOrBlank(serviceName);
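To make the "system parameters" idea concrete, here is a minimal sketch that reads the standard Kubernetes service environment variables directly. Kubernetes injects `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT` into containers for services that exist when the pod starts, with the service name upper-cased and dashes replaced by underscores. The class `ServiceEnv` and its methods are hypothetical names used for illustration only; they are not part of fabric8, and it is an assumption that the fabric8 helper resolves values in a similar way.

```java
import java.util.Locale;
import java.util.Map;

/** Hypothetical helper (not part of fabric8): resolves a service from Kubernetes env variables. */
class ServiceEnv {

    /** Builds the variable name, e.g. ("apiman-gateway", "HOST") gives APIMAN_GATEWAY_SERVICE_HOST. */
    static String envVarName(String serviceName, String suffix) {
        String normalized = serviceName.toUpperCase(Locale.ROOT).replace('-', '_');
        return normalized + "_SERVICE_" + suffix;
    }

    /** Returns the value from the given environment, or an empty string if it is not set. */
    static String lookup(Map<String, String> env, String serviceName, String suffix) {
        return env.getOrDefault(envVarName(serviceName, suffix), "");
    }

    public static void main(String[] args) {
        // Inside a pod, System.getenv() contains the injected service variables.
        Map<String, String> env = System.getenv();
        String host = lookup(env, "C", "HOST");
        String port = lookup(env, "C", "PORT");
        System.out.println("Service C at " + host + ":" + port);
    }
}
```

Passing the environment in as a map keeps the lookup easy to try outside a cluster, where the variables are not set and both values come back blank.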

If you prefer a canonical name instead of the IP address, do a DNS lookup, which returns a string of the form <service-name>.<k8s-namespace>.svc.cluster.local (or <service-name>.<k8s-namespace> for short):

InetAddress initAddress = InetAddress.getByName("C");

String host = initAddress.getCanonicalHostName();
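The in-cluster name can also be assembled without a lookup when the namespace is known. The following is a small sketch, assuming the default cluster domain `cluster.local` (clusters can be configured with a different one); `ServiceDns` is a hypothetical helper name, and the values `c` and `default` are only examples.

```java
/** Hypothetical helper: builds the in-cluster DNS name of a Kubernetes service. */
class ServiceDns {

    // Assumption: the cluster uses the default domain "cluster.local".
    static final String CLUSTER_SUFFIX = "svc.cluster.local";

    /** Long form: <service-name>.<k8s-namespace>.svc.cluster.local */
    static String fqdn(String serviceName, String namespace) {
        return serviceName + "." + namespace + "." + CLUSTER_SUFFIX;
    }

    /** Short form: <service-name>.<k8s-namespace> */
    static String shortName(String serviceName, String namespace) {
        return serviceName + "." + namespace;
    }

    public static void main(String[] args) {
        System.out.println(fqdn("c", "default"));
        System.out.println(shortName("c", "default"));
    }
}
```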

Note that the IP address returned and the canonical address <service-name>.<k8s-namespace> can only be used on the Kubernetes cluster and cannot be used externally. If Client A is external to the Kubernetes cluster, see scenario 3. Before we get there, let’s make Service C managed using Apiman.

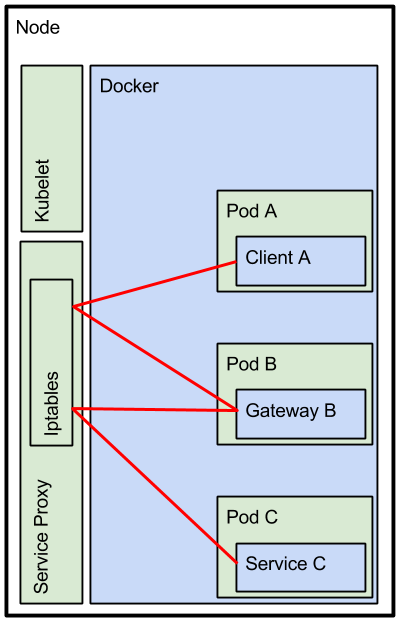

Scenario 2 - K8s Client to K8s Service via the Apiman Gateway

In scenario 2 we add the Apiman Gateway to the mix. If we want Service C to be a managed endpoint, we should import it into Apiman and publish it to the Gateway. The Gateway will then enforce all the policies you have configured. Please see the Fabric8 docs for more info on API management. Once you publish Service C to the Apiman Gateway, the Apiman UI displays an 'Endpoint' tab on the Service page. This gateway endpoint takes the format

http://apiman-gateway.<k8s-namespace>/gateway/<org-name>/<service-name>/<service-version>

Client A should now use this gateway endpoint. The gateway takes care of enforcing the configured policies and makes the call to Service C. Note that the gateway calls the Service Proxy, and the Service Proxy decides to which pod the request is dispatched. By default it picks 'local' pods first.

The fragment below shows how to obtain the host and port settings of the Apiman gateway:

String serviceName = "apiman-gateway";

String host = KubernetesServices.serviceToHostOrBlank(serviceName);

String port = KubernetesServices.serviceToPortOrBlank(serviceName);

If you prefer a canonical name instead of the IP address, do a DNS lookup, which returns a string of the form <service-name>.<k8s-namespace>.svc.cluster.local (or <service-name>.<k8s-namespace> for short):

InetAddress initAddress = InetAddress.getByName("apiman-gateway");

String host = initAddress.getCanonicalHostName();

The full endpoint URL the client should use is http://<host>:<port>/gateway/<org-name>/C/1.0, where C is the name of Service C and 1.0 is the version under which this service is published in Apiman.
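Putting the pieces together, a client could assemble the gateway URL from the looked-up host and port roughly as follows. This is a sketch only: `GatewayEndpoint` is a hypothetical class, and the host, port, organization and service values in `main` are example values rather than anything the gateway requires.

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Hypothetical helper: builds http://<host>:<port>/gateway/<org-name>/<service-name>/<version>. */
class GatewayEndpoint {

    static URI build(String host, int port, String orgName, String serviceName, String version) {
        String path = "/gateway/" + orgName + "/" + serviceName + "/" + version;
        try {
            // The multi-argument constructor assembles the URI and quotes illegal path characters.
            return new URI("http", null, host, port, path, null, null);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Invalid gateway endpoint parts", e);
        }
    }

    public static void main(String[] args) {
        // Example values; in a pod, host and port would come from the service lookup.
        System.out.println(build("apiman-gateway.default", 7777, "kurtOrg", "c", "1.0"));
    }
}
```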

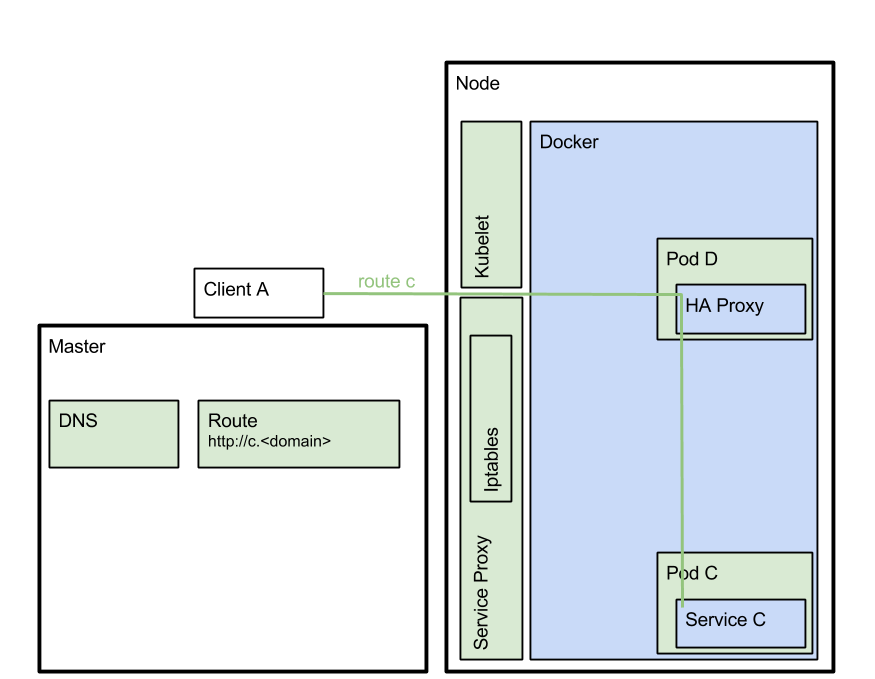

Scenario 3 - External Client to K8s

In scenario 3, Client A is a service external to Kubernetes and you want to invoke Service C on a Kubernetes cluster. This scenario is depicted in figure 2.

This scenario requires a router and a route. On OpenShift the router of choice is HAProxy, and when you create a route it creates a nice canonical address: http://<service-name>.<k8s-domain>. See also the OpenShift docs for the gory details. To get DNS to resolve the canonical address you will need Landrush or dnsmasq, or you can add a zone file for your k8s-domain to your DNS server. In my case, my k8s-domain is stam.f8 and I use the following:

stam.f8. IN A 192.168.1.22 ; f8

*.stam.f8. IN A 192.168.1.22 ; f8

Where 192.168.1.22 is the IP address of the Kubernetes node that runs the HAProxy router. HAProxy listens on all of the host’s network interfaces (0.0.0.0). You can add entries for each node that runs HAProxy, and client calls will round-robin over the nodes. Note that each HAProxy pod still load balances across all pods in the k8s cluster (with some affinity rules). In this scenario no lookup is required; the URL for the endpoint is predetermined and has the format:

http://<service-name>.<k8s-domain>/

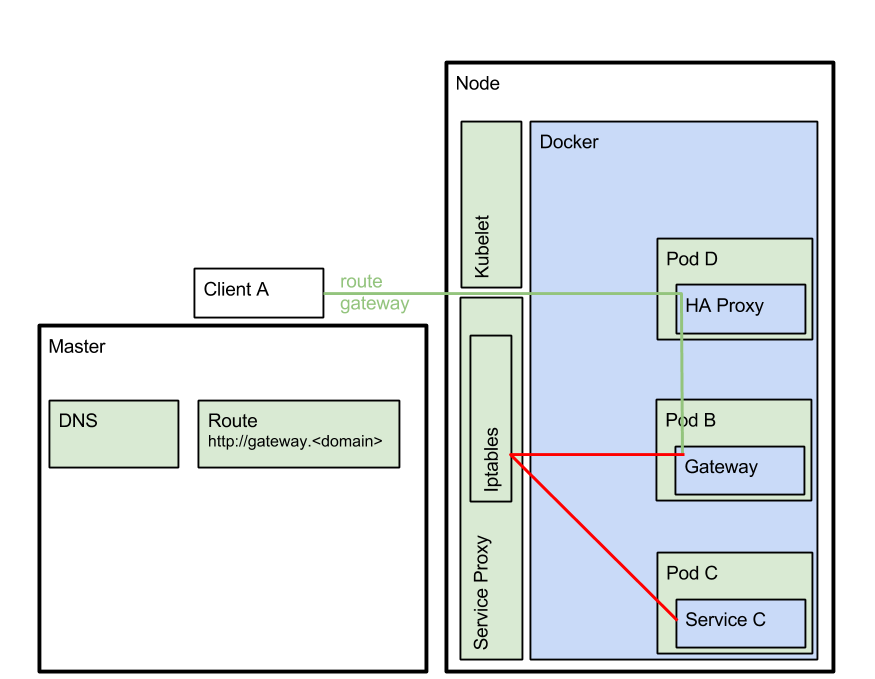

Scenario 4 - External Client to K8s Service via the Apiman Gateway

In the final scenario we have Client A external to the Kubernetes cluster, and we want to make a call to Service C via the Apiman gateway. This means that we need to use the apiman-gateway 'Route' on HAProxy. HAProxy will then invoke the gateway, and the gateway looks at the path part of the request URL to figure out that the call needs to go to Service C (in the given organization).

In this scenario no lookup is required and the URL for the endpoint is predetermined and of format:

http://apiman-gateway.<k8s-domain>/gateway/<org-name>/<service-name>/<service-version>

Note that if you want to force external clients over the gateway then it would make sense to remove the direct route to Service C from HAProxy.

Conclusion

Four scenarios are identified:

1. Scenario 1 - K8s Client to K8s Service
2. Scenario 2 - K8s Client to K8s Service via the Apiman Gateway
3. Scenario 3 - External Client to K8s
4. Scenario 4 - External Client to K8s Service via the Apiman Gateway

In the table below we use a Kubernetes domain of stam.f8, a Kubernetes namespace of default, an org-name of kurtOrg, a service-name of c and a service-version of 1.0. The service port of the gateway is 7777 and the service port of service c is 8080.

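| Scenario | URL format | Example |
|---|---|---|
| 1 | http://<service-name>.<k8s-namespace>:<port>/ | http://c.default:8080/ |
| 2 | http://apiman-gateway.<k8s-namespace>:<gwPort>/gateway/<org-name>/<service-name>/<service-version> | http://apiman-gateway.default:7777/gateway/kurtOrg/c/1.0 |
| 3 | http://<service-name>.<k8s-domain>/ | http://c.stam.f8/ |
| 4 | http://apiman-gateway.<k8s-domain>/gateway/<org-name>/<service-name>/<service-version> | http://apiman-gateway.stam.f8/gateway/kurtOrg/c/1.0 |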
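The four scenarios come down to two questions: is the client inside the cluster, and does the call go through the gateway? The sketch below encodes that decision using the example values from this post (stam.f8, default, kurtOrg, c, 1.0, ports 8080 and 7777). `EndpointChooser` is a hypothetical name, and the scenario 1 URL form (short DNS name plus service port) is an assumption derived from the DNS naming described earlier, not a format stated explicitly in the text.

```java
/** Hypothetical helper: picks the endpoint URL for each of the four scenarios. */
class EndpointChooser {

    // Example values taken from the text; adjust them for your own cluster.
    static final String DOMAIN = "stam.f8";
    static final String NAMESPACE = "default";
    static final String ORG = "kurtOrg";
    static final String SERVICE = "c";
    static final String VERSION = "1.0";
    static final int SERVICE_PORT = 8080;
    static final int GATEWAY_PORT = 7777;

    static String endpoint(boolean clientInCluster, boolean viaGateway) {
        String gatewayPath = "/gateway/" + ORG + "/" + SERVICE + "/" + VERSION;
        if (clientInCluster) {
            return viaGateway
                    // Scenario 2: in-cluster client through the gateway service.
                    ? "http://apiman-gateway." + NAMESPACE + ":" + GATEWAY_PORT + gatewayPath
                    // Scenario 1: in-cluster client straight to the service (assumed URL form).
                    : "http://" + SERVICE + "." + NAMESPACE + ":" + SERVICE_PORT + "/";
        }
        return viaGateway
                // Scenario 4: external client through the gateway route on the router.
                ? "http://apiman-gateway." + DOMAIN + gatewayPath
                // Scenario 3: external client through the service route on the router.
                : "http://" + SERVICE + "." + DOMAIN + "/";
    }

    public static void main(String[] args) {
        System.out.println(endpoint(true, false));
        System.out.println(endpoint(true, true));
        System.out.println(endpoint(false, false));
        System.out.println(endpoint(false, true));
    }
}
```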

Additional Resources

- [1] OpenShift Routers: https://docs.openshift.org/latest/architecture/core_concepts/routes.html#available-router-plug-ins
- [2] Deploy a Router: https://docs.openshift.org/latest/install_config/install/deploy_router.html#creating-the-router-service-account
- [3] https://docs.openshift.org/latest/admin_guide/router.html